Test Load Balancer (TLB)


Concepts in TLB

Components

- A Server: stores and allows querying of test data (test times, test results, etc.)

- A Balancer: partitions and re-orders a suite of tests, given a server URL

Apart from these 2 components, there is a 3rd concept in TLB called a Job.

A Job represents work that needs to be parallelized using TLB. For example, executing all the unit tests of a project "Foo" is a job, as it is work that can be parallelized. In TLB, a Job has 2 attributes:

- Job Name: This uniquely identifies a job in TLB. TLB uses this to store all test data for a given job. In the above example, "Foo-unit-tests" could be set as the name of the job. TLB then stores all the test run times, failure statuses, etc. for the task of running the unit tests in parallel under the name "Foo-unit-tests".

- Job Version: Typically, more than one instance of the same job can be running simultaneously, and TLB needs to give the right partitions to the right instances. For example, builds 10 and 11 can be running simultaneously with 2 partitions each. In this case TLB should not end up using the data posted by build 11 (if it finished faster) to compute partition information for build 10. Nor should TLB use the information posted by partition 1 of build 10 in partition 2 of build 10. Consider the following example:

Let's say we have 3 partitions: A, B and C. A may have started running tests and may have already reported results and times for a few tests by the time B and C start. Now, let's say B and C want to TimeBalance and hence want data from the server. However, B and C must balance based on the exact same data that A started out with, and not the updated data, which includes feedback from A. This means that if new time data for a test becomes available from A, it must not be used in balancing on B. If it were, B could compute a different partitioning than A did, and we may end up re-running the same test on B (for example, because it now appears faster). Seeing identical data is vital for the mutual exclusion and collective exhaustion principle that TLB follows.

To solve this problem, TLB has a concept of versioning. When A starts running, it posts the TlbServer a version string, against which the server stores a snapshot of the data that is relevant for the corresponding TLB_JOB_NAME. When B or C queries data using the same version, it gets the same data that A got. This ensures that all partitions see the same data, in spite of the server receiving new data continuously.

Usually TLB_JOB_VERSION is set such that it changes between suite runs. For instance, the build number can be used as TLB_JOB_VERSION. In this case, A, B and C may all be running at version 10. Using a unique version ensures that frozen (hence stale) data is not used for balancing/ordering a new run of the same test suite. When the next build is triggered, all three partitions start with the corresponding build number, which may be 11, hence the frozen snapshot of data from version 10 is not used.

As can be seen, job versions are important. We advise that every Job have an associated job version. Job versioning may be pretty intimidating to understand initially. However, as you start using TLB regularly, you will be able to appreciate the need for it.
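The snapshot behaviour described above can be illustrated with a small in-memory model. This is only a sketch under assumptions: the class `VersionedTestData` and its methods `report` and `query` are hypothetical names made up for this example and are not TLB's API, and a real server persists its data rather than holding it in dictionaries.

```python
import copy


class VersionedTestData:
    """Toy in-memory model of per-job test data with frozen versions.

    Hypothetical illustration only; not the TLB server implementation.
    """

    def __init__(self):
        self._live = {}       # job name -> {test name: run time in ms}
        self._snapshots = {}  # (job name, version) -> frozen copy of live data

    def report(self, job_name, test_name, time_ms):
        # Feedback from a running partition only updates the live data.
        self._live.setdefault(job_name, {})[test_name] = time_ms

    def query(self, job_name, version):
        # The first query for a version freezes the current data; later
        # queries with the same version see exactly that frozen copy.
        key = (job_name, version)
        if key not in self._snapshots:
            self._snapshots[key] = copy.deepcopy(self._live.get(job_name, {}))
        return dict(self._snapshots[key])


store = VersionedTestData()
store.report("Foo-unit-tests", "FooTest", 120)
store.report("Foo-unit-tests", "BarTest", 300)

seen_by_a = store.query("Foo-unit-tests", "10")  # partition A starts build 10
store.report("Foo-unit-tests", "FooTest", 90)    # A posts fresh feedback
seen_by_b = store.query("Foo-unit-tests", "10")  # partition B starts later
seen_by_build_11 = store.query("Foo-unit-tests", "11")
```

Here `seen_by_a` and `seen_by_b` are identical even though A reported a new time in between, while the query for version "11" picks up the updated time.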

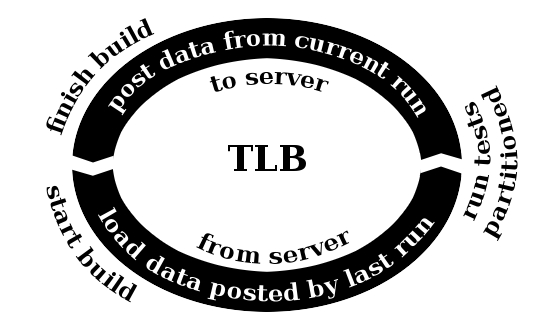

Interaction between the Server and the Balancer while TLBing

Figure 1: Pictorial expression of aforementioned interaction between Server and Balancer, to show where Server and Balancer fit in the entire act of load balancing.

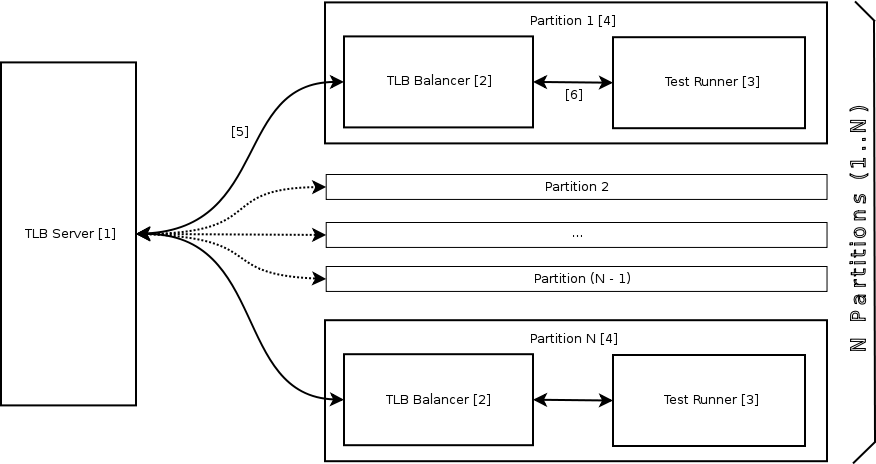

Typical TLB setup

Figure 2: Typical TLB setup for a JVM based project

The above diagram shows what a typical TLB setup looks like.

- TLB Server: Already covered in previous sections.

- TLB Balancer: Already covered in previous sections.

- Test Runner: This is the runner that actually runs the tests. TLB does not take the responsibility of running tests. That is still handled by the underlying framework, i.e. the Test Runner. Examples: JUnit, RSpec, Test::Unit, NUnit etc.

- Partitions: These are the parallel machines/VMs/processes/threads that run the same build task. With TLB hooked in, each of these partitions executes a mutually exclusive set of tests. A partition is also responsible for kicking off the build so that the test framework and TLB are started up. Typically, the machines that execute test tasks (and in turn partitions) in a CI environment are agents from the build grid or build farm of a CI/build server.

- Server - Balancer communication: The Balancer posts test-related data about the current test run to the server and obtains historical data when it is trying to balance and re-order.

- Balancer - Test Runner communication: Before a test runner starts executing tests, TLB gets a callback with the original list (which includes all the tests). This will be the same across all the partitions. TLB executes the same partitioning algorithm in each partition, selects the subset corresponding to the given partition number, and passes that subset on to the test runner. Thus, the test runner in each partition ends up executing a smaller set of tests. Most test runners provide a mechanism for hooking up listeners that are notified about test execution status/life-cycle. Using this, TLB gets information about test run times and outcomes (results). This is what gets posted back to the TLB Server.

Balanced test-execution events on time-line

Figure 3: Balanced test-execution events on time-line


- Splitter: Takes care of splitting (making subsets of) the given list of tests using the given criteria (see Splitter/Balancer Criteria Configuration).

- Orderer: Takes care of re-ordering the tests based on the given criteria (see Orderer Configuration).
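The splitter and orderer roles can be sketched as two small functions. The key property is that every partition runs the same deterministic computation on the same inputs and then keeps only its own bucket, which gives mutually exclusive and collectively exhaustive subsets. The function names `split_by_time` and `failed_first`, and the greedy slowest-first strategy, are assumptions chosen for illustration; they are not necessarily the criteria TLB ships with.

```python
def split_by_time(tests, times, total_partitions, partition_number):
    """Return the tests for one partition (partition_number is 1-based).

    Hypothetical sketch: greedy assignment of the slowest tests first to
    the currently lightest bucket.
    """
    # Tests without recorded times get the average time as a guess.
    default = sum(times.values()) / len(times) if times else 1.0
    # Sort deterministically (slowest first, name as tie-breaker) so that
    # every partition computes the same buckets from the same inputs.
    ordered = sorted(tests, key=lambda t: (-times.get(t, default), t))
    buckets = [[] for _ in range(total_partitions)]
    loads = [0.0] * total_partitions
    for test in ordered:
        lightest = loads.index(min(loads))  # first lightest bucket wins ties
        buckets[lightest].append(test)
        loads[lightest] += times.get(test, default)
    return buckets[partition_number - 1]


def failed_first(tests, previously_failed):
    """Stable re-ordering that moves previously failed tests to the front."""
    return sorted(tests, key=lambda t: t not in previously_failed)


all_tests = ["A", "B", "C", "D", "E"]
times = {"A": 50, "B": 40, "C": 30, "D": 20, "E": 10}

# Each partition would run this independently with its own partition number.
parts = [split_by_time(all_tests, times, 2, n) for n in (1, 2)]
reordered = failed_first(parts[0], {"E"})
```

With these inputs the two partitions receive `["A", "D", "E"]` and `["B", "C"]`, and the orderer moves the previously failed test `"E"` to the front of the first partition's list.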

TLB server (http://github.com/test-load-balancer/tlb):

The server is bundled in both the tlb-server-gXXX.tar.gz and tlb-complete-gXXX.tar.gz archives, which can be obtained from the download page. It comes packaged as the tlb-server jar (a Java archive that carries all the dependencies the server requires). You can use the "server.[sh|bat]" scripts bundled in the distribution to manage the server process. Alternatively, you can invoke it directly:

$ java -jar tlb-server-gXXX.jar

(substitute the corresponding version/revision of jar used).

The TLB server binds to port 7019[1]. Once the server is up, the partitions that are to be balanced (balancer instances) can be pointed to it by setting the environment variable TLB_BASE_URL[2] to the base URL of the TLB server. The Balancer works using an abstraction called Server, and TlbServer[3] is an implementation of this contract that comes packaged in TLB.
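As a rough illustration of pointing a partition at the server, the sketch below builds the environment a build process could be started with. The variable names are the ones this section refers to under Configuration Variables; the helper `balancer_environment`, the host `localhost`, and the job values are assumptions made for the example.

```python
import os


def balancer_environment(base_url, job_name, job_version,
                         total_partitions, partition_number):
    """Return a copy of the current environment with TLB settings added.

    Hypothetical helper; only the variable names come from the docs.
    """
    env = dict(os.environ)
    env.update({
        "TLB_BASE_URL": base_url,
        "TLB_JOB_NAME": job_name,
        "TLB_JOB_VERSION": str(job_version),
        "TLB_TOTAL_PARTITIONS": str(total_partitions),
        "TLB_PARTITION_NUMBER": str(partition_number),
    })
    return env


# Partition 1 of 3 for build 10, talking to a server on the default port.
env = balancer_environment("http://localhost:7019", "Foo-unit-tests", 10, 3, 1)
```

The resulting dictionary can be passed as the `env` argument of `subprocess.run` when launching the build task for that partition.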

Or

Go server (http://www.thoughtworks-studios.com/go-agile-release-management):

TLB has inbuilt support for Go, which means TLB can balance against Go just like it balances against the TLB Server. Running against Go means the tests are run as part of a Go task, which will run on a Go agent. Additionally, because TLB is environment aware, it can infer a few things while running against a Go server. It deduces the equivalent of things like job-name[4], version[5] and total partitions[6] from the way jobs are configured under the stage and pipeline.

To make TLB work with Go, Server needs to use GoServer[3]. In this case, you do not need to run a separate process (TLB Server) to act as the server, because the Go server plays that role. This does not need any change in the Go server or Go configuration apart from the naming convention your Go job names need to follow. The convention is that they need to be of the form "<my-job-name>-X" (where X is a natural number 1..n, when you want to make n partitions), or "<my-job-name>-<UUID>" (where each such job will be made to execute only one partition).

TLB has an abstraction called Talk to service. This is responsible for enabling TLB to talk to the remote Server component. TLB uses this abstraction to download test results, test times etc. from this repository and to publish the run feedback back to the repository (which is used by future builds). TLB is going to continue introducing such abstractions as it evolves, because this style lends itself to enormous flexibility and configurability, and allows us to provide useful options at every level for the user to choose from. In addition, it allows users to write their own implementations of these abstractions, allowing easy pluggability and extensibility.

- [1] Can be overridden; read TLB_SERVER_PORT in Configuration Variables.

- [2] Read TLB_BASE_URL in Configuration Variables.

- [3] Read TYPE_OF_SERVER in Configuration Variables; it should be set to the fully qualified name of the implementation.

- [4] Read TLB_JOB_NAME in Configuration Variables.

- [5] Read TLB_JOB_VERSION in Configuration Variables.

- [6] Read TLB_TOTAL_PARTITIONS in Configuration Variables.

- [7] Read TLB_PARTITION_NUMBER in Configuration Variables.
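The Go job-naming convention described in this section can be illustrated with a small parser. This is a sketch under assumptions: `parse_go_job_name` and `total_partitions` are hypothetical helpers, and counting sibling jobs in a stage is only one plausible way to arrive at the total; it is not taken from the GoServer implementation.

```python
import re

# A UUID is five hyphen-separated hexadecimal groups (8-4-4-4-12 characters).
_UUID = r"[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}"
_UUID_FORM = re.compile(rf"^(?P<name>.+)-(?P<uuid>{_UUID})$")
_NUMBERED_FORM = re.compile(r"^(?P<name>.+)-(?P<number>[1-9][0-9]*)$")


def parse_go_job_name(go_job_name):
    """Return (job name, partition number or None) for a Go job name."""
    # Check the UUID form first: the last group of a UUID may be all digits
    # and would otherwise be mistaken for a partition number.
    match = _UUID_FORM.match(go_job_name)
    if match:
        return match.group("name"), None
    match = _NUMBERED_FORM.match(go_job_name)
    if match:
        return match.group("name"), int(match.group("number"))
    raise ValueError(f"{go_job_name!r} does not follow the naming convention")


def total_partitions(job_names_in_stage, job_name):
    """Count the jobs in a stage that belong to the given job name."""
    count = 0
    for candidate in job_names_in_stage:
        try:
            if parse_go_job_name(candidate)[0] == job_name:
                count += 1
        except ValueError:
            pass  # jobs outside the convention are not partitions
    return count


stage_jobs = ["Foo-unit-tests-1", "Foo-unit-tests-2", "Foo-unit-tests-3", "lint"]
numbered = parse_go_job_name("Foo-unit-tests-2")
by_uuid = parse_go_job_name("Foo-unit-tests-123e4567-e89b-12d3-a456-426614174000")
partitions = total_partitions(stage_jobs, "Foo-unit-tests")
```

For the stage above, the numbered job resolves to partition 2 of the job "Foo-unit-tests", the UUID form yields the same job name without a fixed partition number, and three partitions are counted.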

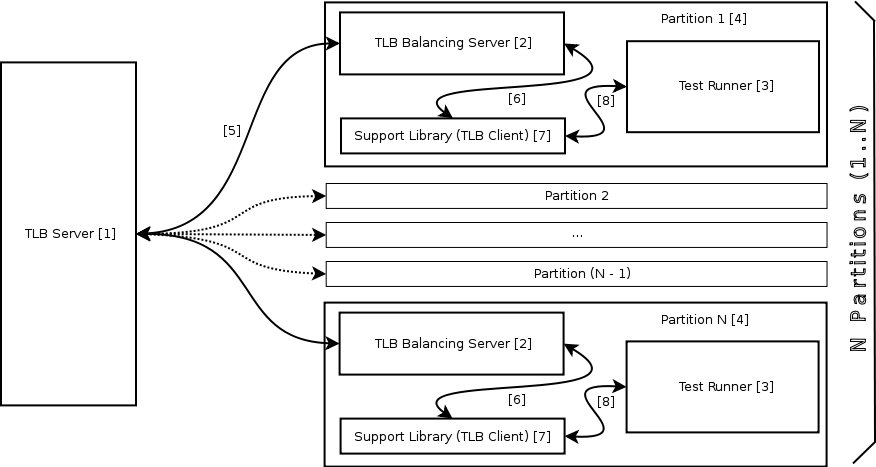

Figure 4: Typical TLB setup for a non-Java/non-JVM project

TLB supports projects in non-Java/non-JVM languages by making the Balancer available as a first-class process, which is an HTTP server. A thin language-specific library takes care of hooking up with the build tool to allow pruning the 'to-be-run' tests list, and also attaches a test listener to the testing framework. These hooks in turn are just wrappers that make HTTP requests to the local Balancer server (with a plain-text payload, the response to which has a plain-text payload too). Once the request reaches the balancer, the regular algorithms kick in, and it goes through the same flow as the Java support does. Since all infrastructure except some glue code is reused, implementing support for a new language or framework is just a matter of spawning the balancer process and having the support library talk to it over HTTP.

- TLB Server: Already covered in previous sections.

- TLB Balancer Server: This is the standalone Java process that exposes its services over HTTP. This server is actually a balancer, which is capable of partitioning given list of test names, and is capable of reporting test-times and test-results back to the TLB Server. This is all that the language specific glue-code library needs to talk to, the actual TLB server is abstracted away.

- Test Runner: This is the runner that actually runs the tests. TLB does not take the responsibility of running tests. That is still handled by the underlying framework, i.e. the Test Runner. Examples: JUnit, RSpec, Test::Unit, NUnit etc.

- Partitions: These are the parallel machines/VMs/processes/threads that run the same build task. With TLB hooked in, each of these partitions executes a mutually exclusive set of tests. A partition is also responsible for kicking off the build so that the test framework and TLB are started up. Typically, the machines that execute test tasks (and in turn partitions) in a CI environment are agents from the build grid or build farm of a CI/build server.

- Server - Balancer communication: The Balancer posts test-related data about the current test run to the server and obtains historical data when it is trying to balance and re-order.

- Balancing Server - Support Library communication: This is the interaction between the Balancer server and the Support Library. Support library makes HTTP requests to the Balancer Server. Support Library posts the list of the tests and gets back a pruned and reordered list from the Balancer server. It also posts the test data(result and time) to the Balancer server, and gets an acknowledgment.

- Support Library: This is the platform/framework/language-specific library that hooks up with the build tool and test runner of the subject environment, and is responsible for launching the Balancer Server, talking to it over HTTP, and tearing it down. This is what is referred to as glue code in some places in this documentation. This is the only component that needs to be written for each language that needs to be supported.

- Test Runner - Support Library communication: Before a test runner starts executing tests, TLB gets a callback with the original list of all the tests. This must be the same across all the partitions. TLB (the Balancing Server) executes the same algorithm on each partition (identified by partition number), and returns the correct pruned and reordered subset of tests to the Test Runner. Hence, the Test Runner ends up executing only a smaller set of tests, taking far less time than executing the whole suite serially. Most test runners provide a mechanism for hooking up listeners that are notified of test state as tests execute. The glue-code library uses test listeners to capture test run times and results and posts them to the Balancing Server, which in turn pushes them to the actual TLB Server (or equivalent).
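The plain-text HTTP exchange between a support library and a local balancer process can be sketched end to end with the Python standard library. Everything specific here is an assumption for illustration: the `/balance` path, the newline-separated payload format, the fixed partition settings on the handler, and the stand-in `pick_subset` function (every n-th test by name) are not taken from the TLB Balancer Server.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def pick_subset(tests, total_partitions, partition_number):
    # Stand-in for the real balancing algorithm: every n-th test by name.
    return sorted(tests)[partition_number - 1::total_partitions]


class BalancerHandler(BaseHTTPRequestHandler):
    total_partitions = 2   # hypothetical fixed settings for this sketch
    partition_number = 1

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        tests = self.rfile.read(length).decode("utf-8").split()
        if self.path != "/balance":  # hypothetical endpoint
            self.send_error(404)
            return
        subset = pick_subset(tests, self.total_partitions, self.partition_number)
        body = "\n".join(subset).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def balance_over_http(base_url, tests):
    """What a thin support library would do: post names, read the subset."""
    request = urllib.request.Request(
        base_url + "/balance",
        data="\n".join(tests).encode("utf-8"),
        headers={"Content-Type": "text/plain; charset=utf-8"},
        method="POST",
    )
    # Bypass any configured proxy, since the balancer is a local process.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(request) as response:
        return response.read().decode("utf-8").split()


# Port 0 lets the operating system choose a free local port.
server = HTTPServer(("127.0.0.1", 0), BalancerHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
subset = balance_over_http(
    f"http://127.0.0.1:{server.server_port}",
    ["c_test", "a_test", "b_test", "d_test"],
)
server.shutdown()
server.server_close()
```

The client side is the only part a new language would need to reimplement, which is why the glue code can stay thin: it serializes test names, makes one request, and parses the reply.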